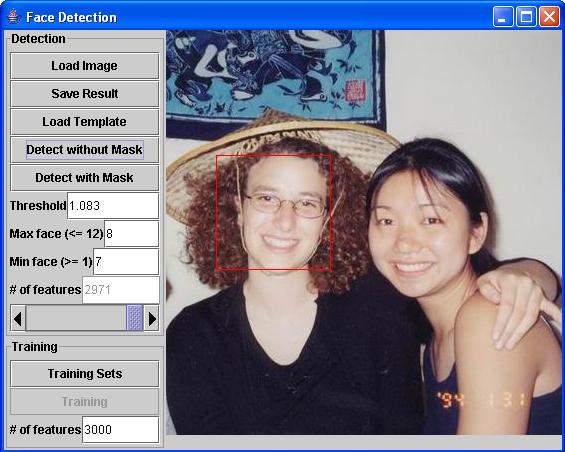

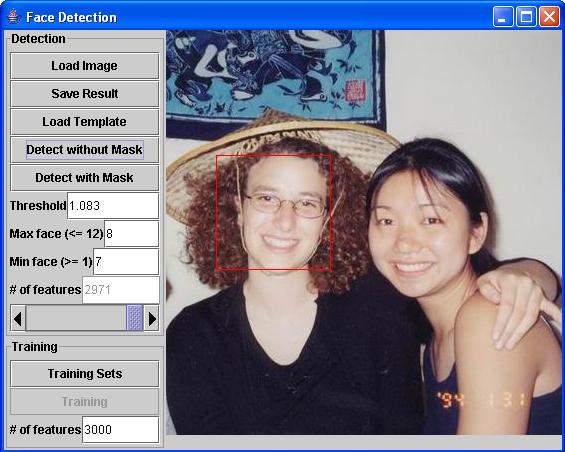

Figure 1

The class FaceDetectDialog provides a tool for detecting faces in grayscale and color images.

Description:

This tool allows users to train face detection classifiers and to detect faces in a still image. The face detection algorithm is based on the work of Viola and Jones, which uses the AdaBoost algorithm. AdaBoost is a widely used boosting technique: it combines several weak classifiers into a strong classifier, which improves the overall detection rate. More information about boosting can be found at http://www.boosting.org/.
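The boosting idea can be illustrated with a minimal sketch of discrete AdaBoost. It assumes each training window has already been reduced to a vector of numeric features and uses single-feature threshold tests (decision stumps) as the weak classifiers. The names `train_stump`, `adaboost` and `strong_classify` are hypothetical and are not part of FaceDetectDialog, whose feature type and training code are not described here.

```python
import math

def train_stump(xs, ys, weights):
    """Return (weighted_error, feature, threshold, polarity) of the best decision stump.

    A stump predicts `polarity` when x[feature] >= threshold, else -polarity.
    """
    best = None
    for f in range(len(xs[0])):
        for thr in sorted({x[f] for x in xs}):
            for polarity in (1, -1):
                err = sum(w for x, y, w in zip(xs, ys, weights)
                          if (polarity if x[f] >= thr else -polarity) != y)
                if best is None or err < best[0]:
                    best = (err, f, thr, polarity)
    return best

def adaboost(xs, ys, rounds):
    """Discrete AdaBoost; labels ys are +1 (face) or -1 (non-face)."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []  # (alpha, feature, threshold, polarity) per weak classifier
    for _ in range(rounds):
        err, f, thr, pol = train_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)  # keep log() finite for perfect stumps
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, f, thr, pol))
        # Misclassified samples gain weight, correctly classified ones lose weight.
        weights = [w * math.exp(-alpha * y * (pol if x[f] >= thr else -pol))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def strong_classify(ensemble, x):
    """Sign of the alpha-weighted vote of all weak classifiers."""
    score = sum(alpha * (pol if x[f] >= thr else -pol) for alpha, f, thr, pol in ensemble)
    return 1 if score >= 0 else -1

xs = [[1.0, 0.0], [2.0, 1.0], [3.0, 0.0], [4.0, 1.0]]
ys = [-1, -1, 1, 1]
model = adaboost(xs, ys, rounds=3)
print([strong_classify(model, x) for x in xs])  # [-1, -1, 1, 1]
```

Each round re-weights the samples so that the next weak classifier concentrates on the examples the previous ones got wrong, and the final vote weights each weak classifier by its accuracy (`alpha`).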


To detect faces in a still image, a user must load the files that describe the classifiers trained by the program. The file names of all classifiers, which have the suffix "ada", are specified in the header file.

After loading the classifier files, the user may adjust (1) the threshold, (2) the number of features, and (3) the MIN/MAX face size to obtain satisfactory face detection performance.

The threshold is the breakpoint at which the strong classifier labels an image rectangle as face or non-face. The higher the threshold, the higher the false negative rate and the lower the false positive rate; the lower the threshold, the lower the false negative rate and the higher the false positive rate. Empirically, a good threshold is usually between 9 and 12.
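The trade-off between the two error types can be sketched as follows, assuming the strong classifier produces a real-valued score for each rectangle and labels it a face when the score reaches the threshold. The scores below are made-up values for illustration, not measurements from this tool, and the helper names are hypothetical.

```python
def classify(score, threshold):
    """Label a rectangle as a face (True) when its strong-classifier score reaches the threshold."""
    return score >= threshold

def error_counts(samples, threshold):
    """Count (false_negatives, false_positives) for labelled (score, is_face) samples."""
    false_negatives = sum(1 for score, is_face in samples
                          if is_face and not classify(score, threshold))
    false_positives = sum(1 for score, is_face in samples
                          if not is_face and classify(score, threshold))
    return false_negatives, false_positives

# Hypothetical scores: three true faces and three non-face rectangles.
samples = [(13.0, True), (11.0, True), (9.5, True),
           (10.5, False), (8.0, False), (6.0, False)]

for t in (9, 10, 12):
    fn, fp = error_counts(samples, t)
    print(f"threshold={t}: false negatives={fn}, false positives={fp}")
```

Raising the threshold from 9 to 12 moves this toy example from (0 false negatives, 1 false positive) to (2 false negatives, 0 false positives).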

The "# of features" setting is the total number of weak classifiers used in the final strong classifier. It cannot exceed the number of weak classifiers that have been trained. In theory, the more weak classifiers are used, the lower the error rate.
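A hedged sketch of how the "# of features" setting might truncate the strong classifier to its first n weak classifiers, rejecting values larger than the number trained. The `(alpha, predict)` representation and the function name `strong_score` are hypothetical, not the tool's own data structures.

```python
def strong_score(weak_classifiers, x, n_features):
    """Weighted vote of the first n_features weak classifiers.

    weak_classifiers: list of (alpha, predict) pairs, where predict(x) returns +1 or -1.
    """
    if not 1 <= n_features <= len(weak_classifiers):
        raise ValueError(
            f"n_features must be between 1 and {len(weak_classifiers)} "
            "(the number of trained weak classifiers)")
    return sum(alpha * predict(x) for alpha, predict in weak_classifiers[:n_features])

# Three hypothetical weak classifiers on a scalar input.
weak = [
    (2.0, lambda x: 1 if x > 0 else -1),
    (1.0, lambda x: 1 if x > 5 else -1),
    (0.5, lambda x: -1),
]
print(strong_score(weak, 3, 2))  # 2.0*1 + 1.0*(-1) = 1.0
print(strong_score(weak, 3, 3))  # 1.0 + 0.5*(-1) = 0.5
```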

The MIN/MAX face values determine the smallest and largest faces that can be found. A user can specify the minimum and maximum values based on a priori knowledge. If no input image has been loaded yet, the edit boxes are disabled.
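The MIN/MAX values can be thought of as bounds on the sizes of the sliding windows the detector scans. The sketch below assumes square windows, a scale factor of 1.25 between successive sizes, and a step of 10% of the window size; none of these values come from the tool itself, and the function names are hypothetical.

```python
def window_sizes(min_face, max_face, scale=1.25):
    """Square window sizes from min_face up to max_face, each `scale` times the previous."""
    if min_face <= 0 or max_face < min_face:
        raise ValueError("require 0 < min_face <= max_face")
    sizes = []
    size = float(min_face)
    while size <= max_face:
        s = int(round(size))
        if not sizes or sizes[-1] != s:  # skip duplicates caused by rounding
            sizes.append(s)
        size *= scale
    return sizes

def candidate_windows(img_w, img_h, min_face, max_face, scale=1.25, step_frac=0.1):
    """Yield (x, y, size) for every square window that fits inside the image."""
    for size in window_sizes(min_face, max_face, scale):
        if size > min(img_w, img_h):
            break  # larger windows cannot fit either
        step = max(1, int(size * step_frac))
        for y in range(0, img_h - size + 1, step):
            for x in range(0, img_w - size + 1, step):
                yield (x, y, size)

print(window_sizes(24, 48))  # [24, 30, 38, 47]
```

Narrowing the MIN/MAX range shrinks the list of sizes, which reduces both the number of windows to classify and the chance of detections at implausible scales.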

Each detected face is shown as a rectangle overlaid on the image frame.

Figure 1 shows an example of the implemented face detection system. Depending on the parameter selection, only a subset of the faces in the image may be detected; in our experience, the algorithm is sensitive to parameter selection.

In our implementation, faces in color images are detected using the first eigenvector of the input image, obtained by principal component analysis (PCA).
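One plausible reading of the PCA step is to compute the dominant principal component of the image's RGB pixel values and project every pixel onto it, producing a single-channel image for the detector. The sketch below assumes that interpretation and uses power iteration on the 3x3 color covariance matrix to stay dependency-free; it is not taken from the tool's source, and the function names are hypothetical.

```python
import math

def first_principal_component(pixels, iterations=100):
    """Mean and dominant eigenvector of the 3x3 covariance of (r, g, b) pixels."""
    n = len(pixels)
    mean = [sum(p[c] for p in pixels) / n for c in range(3)]
    cov = [[0.0] * 3 for _ in range(3)]
    for p in pixels:
        d = [p[c] - mean[c] for c in range(3)]
        for i in range(3):
            for j in range(3):
                cov[i][j] += d[i] * d[j] / n
    # Power iteration: repeatedly multiply by the covariance and renormalize.
    v = [1.0 / math.sqrt(3)] * 3
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(3)) for i in range(3)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # constant image: no direction of variation
            break
        v = [x / norm for x in w]
    if sum(v) < 0:  # an eigenvector's sign is arbitrary; point it toward "brighter"
        v = [-x for x in v]
    return mean, v

def project_to_gray(pixels):
    """Project each mean-centred pixel onto the first principal component."""
    mean, v = first_principal_component(pixels)
    return [sum((p[c] - mean[c]) * v[c] for c in range(3)) for p in pixels]
```

For an image whose colors vary only in the red channel, the first component is the red axis and the projection reduces to the mean-centred red values.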

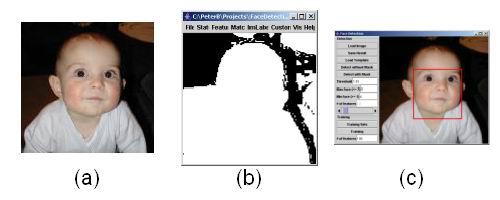

Figure 2

To train classifiers, a user should specify the directory containing face images and the directory containing non-face images. After these directories are specified, pressing the Training button builds the classifiers. This operation is usually very computationally expensive.
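Gathering the labelled training set from the two directories might look like the sketch below. The accepted file extensions, the +1/-1 label convention and the name `collect_training_set` are assumptions; the tool's own loader and supported image formats are not documented here.

```python
import os

# Assumed image formats; the tool's supported formats are not documented here.
IMAGE_EXTENSIONS = {".bmp", ".pgm", ".png", ".jpg", ".jpeg"}

def collect_training_set(face_dir, nonface_dir):
    """Return (path, label) pairs: +1 for face images, -1 for non-face images."""
    samples = []
    for directory, label in ((face_dir, 1), (nonface_dir, -1)):
        for name in sorted(os.listdir(directory)):
            path = os.path.join(directory, name)
            ext = os.path.splitext(name)[1].lower()
            if os.path.isfile(path) and ext in IMAGE_EXTENSIONS:
                samples.append((path, label))
    return samples
```

The resulting list could then feed a boosting routine. With decision-stump weak classifiers, each boosting round evaluates every candidate feature and threshold against every sample, which is one reason training is so much slower than detection.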