
About

Wheelchair Basketball Coaching

PHYSNET project: Physical Interaction Using the Internet: The Virtual Coach Intervention

Tele-immersive technology could significantly improve access to knowledgeable coaches. That, in turn, would improve the ability to acquire the knowledge and skills needed to engage competently in physical activity without injury. Stopping and turning can cause soft tissue injuries to the hands (e.g., blisters), sprained or strained fingers, and other upper extremity injuries, even when state-of-the-art sport wheelchairs (with waist belts and anti-tipping features) are used. In a fully tele-immersive environment, learning shooting techniques and training drills (hook pass, figure-eight dribble, one-on-one defense) will involve very limited co-action with other players to minimize risk, and all such activities will be closely supervised remotely by the coach. Tele-immersive technology can create digital clones of people and objects at multiple geographical locations and place them into a shared virtual space in real time. This technology turns out to be very useful for citizens with limited proprioception (the sense of the relative position of neighbouring parts of the body, and of locomotion). Tele-immersive environments can provide spatial cues that could help in regaining proprioception. They can also support the training of children, athletes and veterans with disabilities, and facilitate physical interaction and communication between persons with disabilities and their relatives and others at home and at work. The team at NCSA/UIUC works closely with the Disability Services at the University of Illinois (DRES) and with the wheelchair basketball players.
The team has researched and developed a prototype tele-immersive technology that addresses three problems: (a) adaptive placement of stereo camera networks for optimal deployment; (b) robust performance under illumination changes, using thermal infrared and visible spectrum imaging; and (c) quantitative understanding of the value of tele-immersive environments for citizens with limited proprioception. The figures below illustrate the technical challenges of building and deploying robust, inexpensive and portable tele-immersive systems, as well as of evaluating the value of such technologies for citizens with disabilities. The experience gained from the current work on wheelchair basketball coaching and proprioception applications has opened the door to studying physical interactions over the Internet. We collaborate with Mike Frogley, head coach of the men's and women's basketball teams at the University of Illinois.

Experimental setup of the spaces. Left: physical space at NCSA with the portable camera clusters and LCD. Right: action in the CS building (physical space) as seen remotely on a computer monitor. Middle: the two spaces combined into a virtual space.

How Does the System Work?

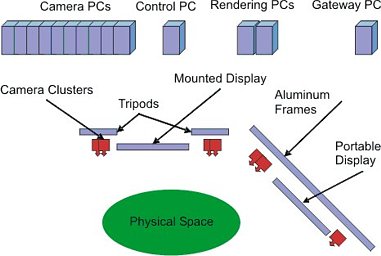

The infrastructure required for a tele-immersive system spans everything from networking and stereo camera rigs to the software that controls camera operation, calibration image acquisition, synchronization, 3D reconstruction, and foreground detection.

Data volume and networking: the uncompressed data stream has huge bandwidth requirements.
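The exact stream figures are not given here, so the sketch below uses hypothetical values (640x480 pixels, 1 byte per pixel, 20 fps) purely to show how quickly uncompressed video adds up. The camera count of eight matches the two four-camera clusters of Figure 2, but the resolution and pixel format are assumptions, and `raw_bandwidth_mbps` is an illustrative helper, not part of the system.

```python
def raw_bandwidth_mbps(width: int, height: int, bytes_per_pixel: int,
                       fps: float, num_cameras: int) -> float:
    """Uncompressed video bandwidth in megabits per second (1 Mbit = 10**6 bits)."""
    bits_per_frame = width * height * bytes_per_pixel * 8
    return bits_per_frame * fps * num_cameras / 1e6

# Hypothetical configuration: 640x480 pixels, 1 byte per pixel, 20 fps.
per_camera = raw_bandwidth_mbps(640, 480, 1, 20, 1)
# Two clusters of four visible cameras each -> eight raw streams.
all_cameras = raw_bandwidth_mbps(640, 480, 1, 20, 8)
print(f"{per_camera:.1f} Mbit/s per camera, {all_cameras:.1f} Mbit/s total")
# prints: 49.2 Mbit/s per camera, 393.2 Mbit/s total
```

Even under these modest assumptions, a single site produces hundreds of megabits per second before any 3D reconstruction or compression.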

Figure 1: A sketch of our experimental setup (physical space). The approximate room size is 15 ft x 15 ft.

System operation:

Vision algorithms that perform 3D reconstruction rely primarily on knowledge of the camera positions and orientations with respect to some reference frame. Camera calibration is the process of determining the geometric and optical parameters that describe the transformation from an object in the world to its image detected by the camera system. These parameters are usually grouped into intrinsic and extrinsic parameters. Intrinsic parameters describe quantities that are affected by the optical and electrical components of a camera: (a) focal length, (b) pixel aspect ratio, (c) principal point, (d) lens distortion, etc. Extrinsic parameters describe the geometric position and orientation of the camera with respect to the world.
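To make the split between the two parameter groups concrete, here is a minimal pinhole-projection sketch, x ~ K (R X + t). The extrinsics (R, t) move a world point into the camera frame, and the intrinsic matrix K (focal length, pixel aspect ratio, principal point) maps it to pixels. It assumes zero skew, one common aspect-ratio convention (fy = f * aspect), and ignores lens distortion. The helper names and numeric values are hypothetical, not the calibration output of this system.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]

def intrinsic_matrix(f: float, aspect: float, cx: float, cy: float) -> Matrix:
    """K from focal length f (pixels), pixel aspect ratio and principal point; zero skew assumed."""
    return [[f, 0.0, cx],
            [0.0, f * aspect, cy],
            [0.0, 0.0, 1.0]]

def mat_vec(m: Sequence[Sequence[float]], v: Sequence[float]) -> List[float]:
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]

def project(K: Matrix, R: Matrix, t: Sequence[float], X: Sequence[float]) -> Tuple[float, float]:
    """Pinhole projection x ~ K (R X + t); lens distortion is ignored in this sketch."""
    # Extrinsics: rotate and translate the world point into the camera frame.
    Xc = [a + b for a, b in zip(mat_vec(R, X), t)]
    if Xc[2] <= 0:
        raise ValueError("point lies behind the camera")
    # Intrinsics: map camera coordinates to homogeneous pixel coordinates.
    u, v, w = mat_vec(K, Xc)
    return (u / w, v / w)

K = intrinsic_matrix(f=800.0, aspect=1.0, cx=320.0, cy=240.0)  # hypothetical values
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]        # camera aligned with world axes
t = [0.0, 0.0, 0.0]
print(project(K, R, t, [0.5, -0.25, 2.0]))  # (520.0, 140.0)
```

Calibration is the inverse problem: estimating K, R and t (plus distortion) for every camera so that projections like this agree with the observed images.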

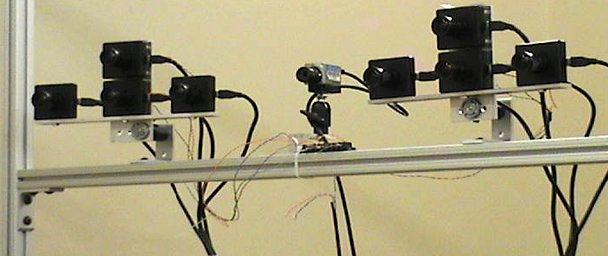

Figure 2: The image depicts two trinocular stereo clusters and one thermal infrared camera. Each stereo cluster contains three grayscale and one color Dragonfly digital cameras. The thermal camera is an uncooled microbolometer that detects thermal energy in the long-wave infrared (LWIR) band, at wavelengths of 7.5 to 13.5 microns.

Our calibration method is based on the technique of Tomáš Svoboda and co-workers, and calibration takes about 1-3 hours. Our goal is to automate the calibration of intrinsic parameters while still allowing manual calibration of extrinsic parameters. A high frame rate is required for motion capture (e.g., a bouncing basketball). Our system runs at 20-24 fps on a quad-core machine, compared with the original 2-5 fps on a single-core machine and 12 fps on a dual-core machine.

Integration of thermal and visible imagery for robust foreground detection in tele-immersive spaces

The central objects of interest in a tele-immersive system are the people in the scene, the things they jointly manipulate, and the tools they need to perform this manipulation. Making certain assumptions about the image reduces the complexity of the detection problem and makes it easier to find objects of interest. Typical assumptions are that background materials have non-reflective surfaces, that there is an intensity difference between background and foreground objects, that scene illumination is constant, that the foreground object is uniformly illuminated by diffuse lighting, and that scene lighting exhibits a constant power spectrum. Unfortunately, these assumptions often break down in practice. In particular, five characteristics of real scenes cause problems in the current TEEVE system: changing illumination, moving foreground objects (which cast shadows), moving background objects, lack of contrast between foreground and background objects, and lack of contrast between different foreground objects.
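Under these assumptions, foreground detection can be as simple as subtracting a static background image from the current frame and thresholding the difference. The minimal sketch below assumes 8-bit grayscale frames stored as nested lists and a hypothetical fixed threshold of 30 intensity levels (not a TEEVE parameter). It also shows how a global lighting change breaks the constant-illumination assumption.

```python
from typing import List

Image = List[List[int]]

def foreground_mask(current: Image, background: Image, threshold: int = 30) -> List[List[bool]]:
    """Mark a pixel as foreground when it differs from the static background by more than threshold."""
    return [[abs(c - b) > threshold for c, b in zip(cur_row, bg_row)]
            for cur_row, bg_row in zip(current, background)]

background = [[50, 50, 50],
              [50, 50, 50]]

# A bright person enters the middle column; small sensor noise elsewhere stays below threshold.
frame = [[50, 200, 50],
         [52, 210, 49]]
print(foreground_mask(frame, background))  # [[False, True, False], [False, True, False]]

# A lamp is switched on: every pixel brightens by 60 levels, so the whole image looks like foreground.
lit = [[v + 60 for v in row] for row in background]
print(foreground_mask(lit, background))    # [[True, True, True], [True, True, True]]
```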

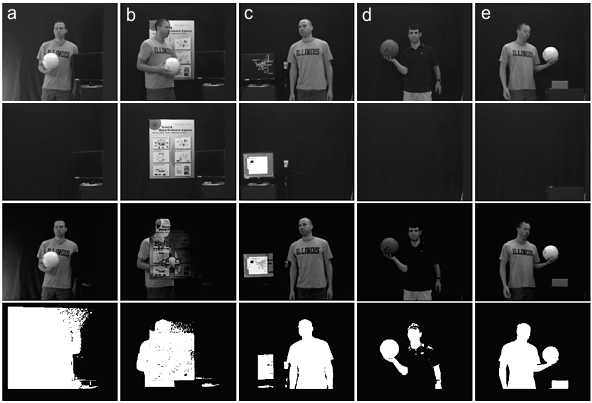

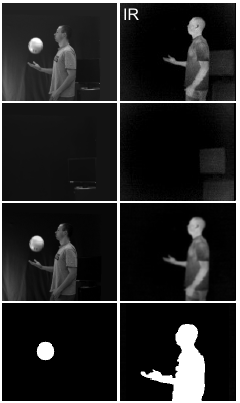

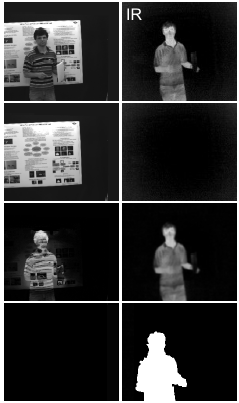

Figure 3: Problems in object detection. Top images are the current frames, the images below them are static backgrounds taken before image acquisition, the third from the top is the current frame minus the static background, and the bottom images show the foreground detection. (a) Changing illumination: in this sequence, a small lamp was turned on before the current acquisition, causing large portions of the background to be seen as foreground. (b) Moving foreground objects: moving foreground objects cast shadows and change the general scattering environment of the scene; in this sequence, background objects are mistaken for foreground. (c) Moving background objects: the computer display (a background object) changed appearance between the acquisition of the background and the current frame, so the current system treats the changed pixels as foreground. (d) Low contrast between foreground and background: the visible modality has difficulty classifying foreground objects whose intensities or colors (here, a dark shirt) closely match the background model. (e) Low contrast between different foreground objects: the current TEEVE system cannot distinguish between the object of interest (the ball) and a rectangular object, and therefore classifies both as foreground.

Visual and thermal cameras provide fundamentally different information. Whereas visual cameras primarily measure how materials reflect light, thermal cameras primarily measure temperature. Because of these differences in content, a combination of visual and infrared images can provide more information about a scene than either modality used alone.
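One way to see why the two modalities complement each other is a toy pixel-level fusion rule. The sketch below is hypothetical and is not the project's fusion algorithm: it flags pixels in an assumed human-body temperature range (30-40 °C) in the thermal image, takes the union with the visible mask so thermal fills low-contrast gaps, and falls back to thermal alone when the visible mask flags more than half the image (treated here as a global lighting change). The function names, temperature range and 0.5 fraction are all assumptions.

```python
from typing import List

Mask = List[List[bool]]

def thermal_mask(temps_c: List[List[float]], low: float = 30.0, high: float = 40.0) -> Mask:
    """Flag pixels whose temperature (deg C) lies in a hypothetical human-body range."""
    return [[low <= t <= high for t in row] for row in temps_c]

def fuse(visible: Mask, thermal: Mask, max_visible_fraction: float = 0.5) -> Mask:
    """Hypothetical fusion rule (not the project's algorithm).

    Normally take the union of both masks, so thermal fills gaps where the visible
    camera sees low contrast. If the visible mask flags more than max_visible_fraction
    of the image, assume a global lighting change and trust the thermal mask alone.
    """
    total = sum(len(row) for row in visible)
    flagged = sum(sum(row) for row in visible)  # True counts as 1
    if flagged > max_visible_fraction * total:
        return [row[:] for row in thermal]
    return [[v or t for v, t in zip(v_row, t_row)] for v_row, t_row in zip(visible, thermal)]

# Toy 2x3 thermal frame: a person (about 33-34 deg C) in the middle column.
temps = [[22.0, 34.0, 22.0],
         [22.0, 33.0, 22.0]]
th = thermal_mask(temps)

# Low contrast (dark shirt): the visible camera only catches the top half of the person.
visible_low_contrast = [[False, True, False],
                        [False, False, False]]
print(fuse(visible_low_contrast, th))  # thermal fills in the missing bottom pixel

# Lamp switched on: the visible mask flags every pixel.
visible_lamp = [[True] * 3, [True] * 3]
print(fuse(visible_lamp, th))          # falls back to the thermal mask
```

A fixed rule like this would also drop cold objects such as the ball whenever it falls back to thermal, so other cues are still needed; the text below notes that the project's inanimate object detection relies on shape.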

Figure 4: Left: TEEVE system based on visible spectrum imaging only. Right: tele-immersive system based on visible and thermal IR spectrum imaging and information fusion.

IR imaging can provide three types of benefits to tele-immersive systems: (1) IR can enhance low-level image processing tasks (e.g., human foreground detection); (2) IR can allow tele-immersion users to perceive temperature in the virtual environment through visual or tactile feedback; and (3) IR can fundamentally enhance material and object classification.

Final Results

Figure 5: Left: Visible and infrared camera (marked IR) frames showing the changing illumination result similar to the figure 3a. Middle: Moving foreground scenario similar to the fig.3b. Top images are the current frames, below are static backgrounds taken before image acquisition, current minus static background is the third from top and finally bottom images show the foreground detection. Right: Low contrast between foreground/background experimental results (figure 3d). Fusion with thermal information is able to fill in the missing information. Finally, figure 6 shows the combined results. In the changing illumination experimental results

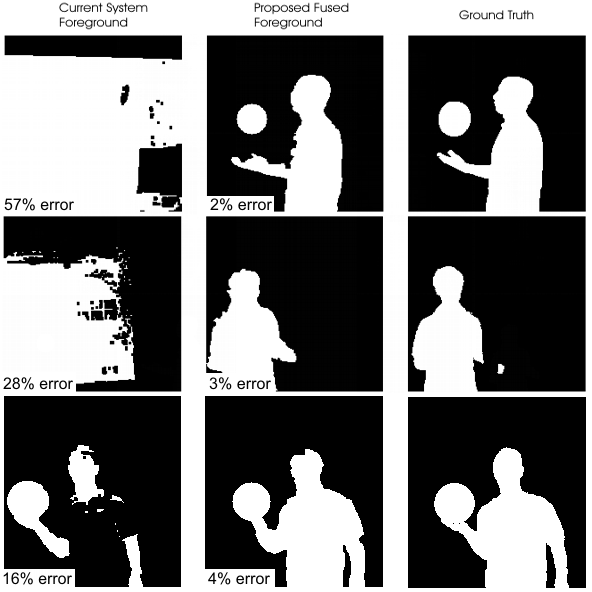

(figure 3a and 5 left)

the thermal imagery is not sensitive to the lighitng change and is able to detect the person in the scene. A

lso, because our inanimate object detection emphasizes higher level features, in this case shape, the ball is

correctly identified as an object of interest.

Figure 6: Top: changing illumination results. The three top pictures show the results of our visible and IR fusion, compared with the performance of the existing system, for the changing illumination problem described in Figures 3a and 5 (left). Middle: results for the moving foreground object problem (Figures 3b and 5, middle). Bottom: improved detection of the foreground object using thermal information (Figures 3d and 5, right).
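The quantitative comparison that follows scores each method by counting misclassified pixels against the correct segmentation. A minimal sketch of that bookkeeping, assuming the detected and ground-truth masks are same-sized grids of booleans (True = foreground); `detection_errors` is a hypothetical name, and its keys simply mirror the table's columns:

```python
from typing import Dict, List

Mask = List[List[bool]]

def detection_errors(predicted: Mask, truth: Mask) -> Dict[str, float]:
    """Pixel error counts as defined for the results table (True = foreground)."""
    f_neg = f_pos = n_pixels = 0
    for p_row, t_row in zip(predicted, truth):
        for p, t in zip(p_row, t_row):
            n_pixels += 1
            if t and not p:
                f_neg += 1  # true foreground labelled as background
            elif p and not t:
                f_pos += 1  # true background labelled as foreground
    total = f_neg + f_pos
    return {"F Neg": f_neg, "F Pos": f_pos, "Total": total,
            "% error": 100.0 * total / n_pixels}

truth = [[False, True, True, False],
         [False, True, True, False]]
predicted = [[True, True, False, False],
             [False, True, True, False]]
print(detection_errors(predicted, truth))
# {'F Neg': 1, 'F Pos': 1, 'Total': 2, '% error': 25.0}
```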

Table of quantitative results comparing the performance of the current tele-immersive system with that of our proposed fusion algorithm. "F Neg" is the number of pixels incorrectly classified as background (false negative detections). "F Pos" is the number of pixels incorrectly classified as foreground (false positive detections). "Total" is the sum of these two pixel counts, and the percent error is the percentage of the image that was misclassified.

Summary

The figure below presents an example of a foreground detection problem approached by fusing thermal infrared (IR) and visible images. By exploring the fusion of multiple sensor modalities in tele-immersive systems, we can enhance computational efficiency, the users' immersive experience, and automatic scene understanding.
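Figure 7 (bottom row) applies simple thresholding and connected components to the thermal frame. A minimal sketch of that step follows. It assumes the thermal frame is a grid of temperatures in °C, a hypothetical 30 °C threshold, and 4-connectivity, and it uses a breadth-first flood fill. `warm_components` is an illustrative name, not part of TEEVE.

```python
from collections import deque
from typing import List, Tuple

def warm_components(frame: List[List[float]], threshold: float) -> List[List[Tuple[int, int]]]:
    """Threshold a thermal frame and group warm pixels into 4-connected components."""
    rows, cols = len(frame), len(frame[0])
    seen = [[False] * cols for _ in range(rows)]
    components = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or frame[r][c] < threshold:
                continue
            # Breadth-first flood fill starting from an unvisited warm pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and frame[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            components.append(pixels)
    return components

# Toy thermal frame (deg C): a person on the left, a hot cup of water on the right.
frame = [[21, 34, 35, 21, 21],
         [21, 33, 34, 21, 45],
         [21, 32, 21, 21, 21]]
comps = warm_components(frame, threshold=30)
print([len(c) for c in comps])  # [5, 1]
```

Both the person and the cup survive thresholding as separate blobs. As the Figure 7 caption notes, telling them apart requires model-based classification rather than temperature alone.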

Figure 7: This set of images (from a single time step) demonstrates a particularly challenging scenario that involves a dynamic scene in both the visible and thermal wavelengths. Top row: visible-wavelength background image, current visible frame, and the difference between the current visible frame and the background. Bottom row: thermal background, current thermal frame, and the result of simple thresholding and connected components on the thermal frame. The scene contains a monitor showing a dynamic video and a cup of warm water that is cooling over time. Note that the monitor can also change temperature over time if it is turned off or hibernating. In this challenging case, model-based classification will be able to tell the difference between the human subject and other objects.

People, Publications, Presentations

Team members

Former members

Funding

The funding was provided by National Science Foundation IIS 07-03756 grant 490630 and the NCSA core grant.

Publications
