University of Illinois

University of Illinois at Urbana-Champaign

About

Tele-immersion technology uses arrays of cameras and microphones to capture 3D scenes in real time. By installing this setup at multiple remote sites and streaming the 3D data between them, one can give users a level of interaction not currently attainable with conventional 2D systems. In a tele-immersive system, users, represented as 3D data, are merged into a common virtual environment where they can interact with other remote users and with shared virtual objects placed in the environment with them. The main goal of our work is to design and build a portable, reconfigurable, and inexpensive tele-immersive system from commercial off-the-shelf components. Potential applications for tele-immersion include:

Hardware

Our tele-immersive system has moved away from the self-constructed trinocular stereo camera clusters we once used. Those systems required each cluster of cameras (three grayscale and one color) to have its own dedicated high-end computer to perform the image rectification, pixel correlation, and triangulation needed to recover depth values from the sets of images produced. The current system instead uses commercial 3D stereo cameras that have become available in recent years. By using these compact units we eliminate much of the bulk associated with the system, making it much more portable. The cameras we use, TYZX DeepSea G2 units, are shown below.
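As a rough illustration of the triangulation step mentioned above, the sketch below recovers a 3D point from a pixel and its disparity in an idealized, already rectified stereo pair, using Z = f * B / d. The function names, the pinhole camera model, and all numeric parameters are assumptions made for illustration; this is not the code that ran on the trinocular clusters or that runs inside the G2 cameras.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in meters for a rectified stereo pair (Z = f * B / d).

    Returns None when the disparity is not positive, i.e. when no valid
    pixel correlation was found for this pixel.
    """
    if disparity_px <= 0:
        return None
    return focal_length_px * baseline_m / disparity_px


def triangulate(u, v, disparity_px, focal_length_px, baseline_m, cx, cy):
    """Back-project pixel (u, v) to a 3D point in the camera frame.

    (cx, cy) is the principal point in pixels (hypothetical values here).
    """
    z = depth_from_disparity(disparity_px, focal_length_px, baseline_m)
    if z is None:
        return None
    x = (u - cx) * z / focal_length_px
    y = (v - cy) * z / focal_length_px
    return (x, y, z)


# Hypothetical camera: 500 px focal length, 10 cm baseline, 640x480 image.
point = triangulate(u=420, v=240, disparity_px=10,
                    focal_length_px=500, baseline_m=0.1, cx=320, cy=240)
print(point)
```

Because depth is inversely proportional to disparity, small disparity errors at long range produce large depth errors, which is one reason the correlation step is computationally demanding.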

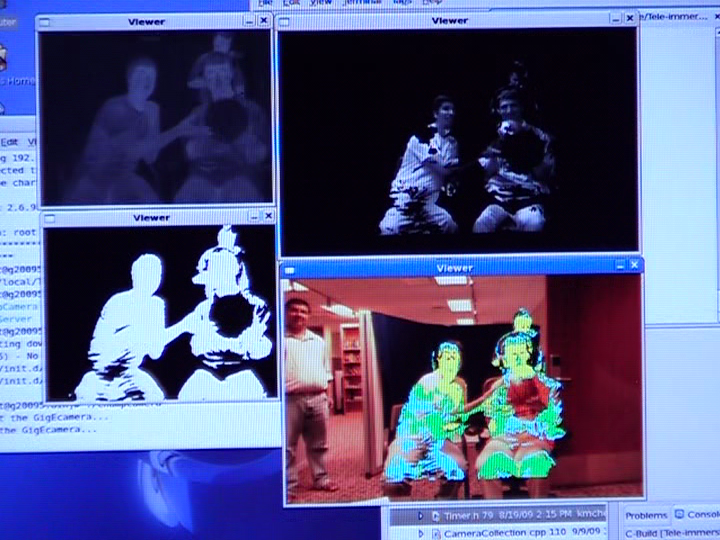

Left: A custom-built trinocular stereo cluster made of three Point Grey grayscale cameras and one color camera. Middle: A TYZX DeepSea G2 stereo network camera with on-board CPU. Right: A G2 camera with a side-mounted FLIR Photon thermal infrared camera.

In addition to the G2 cameras, which produce the 3D data for a site, we use thermal infrared (IR) cameras. Each IR camera is registered with a particular 3D camera and used to clean up much of the background clutter in the data that would otherwise be difficult to remove. Human beings, being warm-blooded mammals, generate much more heat than most ambient objects. The registered IR cameras take advantage of this fact to separate the objects of interest, humans, from everything else.

To display the 3D content to users we use large 52-inch LCD displays. To make the system as portable as possible, we mount several of the 3D cameras on the TV cart holding the displays.
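The thermal background removal described above can be sketched as a simple threshold on registered temperature readings. The sketch assumes that registration has already assigned each 3D point a temperature in degrees Celsius; the function name and the 28 °C threshold are hypothetical, and the real system may use a different criterion.

```python
def remove_background(points, temperatures_c, min_temp_c=28.0):
    """Keep only the 3D points whose registered thermal reading is warm.

    points          -- list of (x, y, z) tuples from the 3D camera
    temperatures_c  -- one temperature per point, from the registered IR camera
    min_temp_c      -- hypothetical threshold separating people from background
    """
    if len(points) != len(temperatures_c):
        raise ValueError("each 3D point needs exactly one temperature reading")
    return [p for p, t in zip(points, temperatures_c) if t >= min_temp_c]


# Two warm points (a person) and two cool points (wall and floor).
cloud = [(0.1, 1.2, 2.0), (0.2, 1.3, 2.0), (1.5, 0.0, 4.0), (0.0, 0.0, 3.5)]
temps = [33.5, 34.1, 21.0, 20.4]
foreground = remove_background(cloud, temps)
print(foreground)
```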

The 52-inch displays used to view the virtual environment. Through a PC connected to the displays, a user can rotate the scene and zoom in and out using the mouse and keyboard.

Below we show one of our tele-immersive labs containing three LCD displays and six G2 3D cameras. In this example the system is set up for proprioception experiments with wheelchair basketball players.

Left: A room set up as a tele-immersive site for proprioception experiments with wheelchair basketball players. Right: Bi-modal color markers used to represent virtual walls that subjects must avoid.

The bi-modal color markers on the floor provide convenient targets that software can easily track through a ceiling-mounted camera. They are used to measure the distance of subjects from the virtual walls, which are represented by the boundary between the two colors.
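One way to compute the proximity measurement described above is to treat each virtual wall as a 2D line segment in floor coordinates and take the shortest point-to-segment distance. This is a minimal sketch under that assumption; the function names and coordinates are hypothetical, and it does not cover how the markers are detected in the ceiling camera image.

```python
import math


def distance_to_segment(p, a, b):
    """Shortest distance from point p to the segment from a to b (2D)."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        # Degenerate wall: both endpoints coincide.
        return math.hypot(px - ax, py - ay)
    # Project p onto the line through a and b, then clamp to the segment.
    t = ((px - ax) * dx + (py - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    closest_x, closest_y = ax + t * dx, ay + t * dy
    return math.hypot(px - closest_x, py - closest_y)


def nearest_wall_distance(subject, walls):
    """Distance from the subject to the nearest wall in a list of segments."""
    return min(distance_to_segment(subject, a, b) for a, b in walls)


# Two hypothetical walls meeting at a corner, in floor coordinates (meters).
walls = [((0, 0), (4, 0)), ((4, 0), (4, 3))]
print(nearest_wall_distance((2, 1), walls))
```

Evaluating this once per tracked frame gives the distance-over-time trace that a plotting tool could display.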

Left: The ceiling-mounted network camera used to monitor activity below. Right: A screenshot of the software used to track the bi-modal markers seen by the ceiling camera and to plot the distance of a subject from the nearest virtual wall (represented by the bi-modal markers).

Bi-modal markers and ceiling-mounted cameras are only one means of allowing people to interact with a virtual environment. We are currently exploring other techniques, including the use of Wii Remotes, personal microphones, head-mounted displays, and haptic feedback devices.

Seamless human/computer interaction is crucial to providing an intuitive and effortless tele-immersive experience. The question is, what is the best way to achieve this? We are currently investigating various methods...

Other equipment required to set up a tele-immersive site includes color charts, to ensure that each camera senses colors in the same way, and calibration devices, to spatially register the multiple cameras into a common coordinate system.
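Once calibration has produced a rotation and translation for each camera, registering the cameras into a common coordinate system amounts to applying p_world = R * p_camera + t to every point. The sketch below assumes those extrinsics are already known; the function names and example values are hypothetical, and it does not implement the calibration procedure itself.

```python
def to_world(point, rotation, translation):
    """Map a camera-frame point into the common frame: R * p + t.

    rotation    -- 3x3 matrix given as three rows
    translation -- 3-element sequence
    """
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def merge_clouds(clouds, extrinsics):
    """Combine per-camera point clouds into one cloud in the common frame.

    clouds     -- one list of (x, y, z) points per camera
    extrinsics -- one (rotation, translation) pair per camera
    """
    merged = []
    for points, (rotation, translation) in zip(clouds, extrinsics):
        merged.extend(to_world(p, rotation, translation) for p in points)
    return merged


# Camera A defines the common frame; camera B is rotated 90 degrees about
# the z axis and shifted one unit along x (hypothetical extrinsics).
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
rot_z_90 = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]
merged = merge_clouds(
    [[(1, 0, 0)], [(1, 0, 0)]],
    [(identity, (0, 0, 0)), (rot_z_90, (1, 0, 0))],
)
print(merged)
```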

Left: A flashlight with an incandescent bulb, detectable by both visible-spectrum color/grayscale cameras and thermal infrared cameras. This common flashlight is used to calibrate the multiple cameras using the method of Svoboda et al. [PRESENCE: Teleoperators and Virtual Environments, 2005]. Right: A Gretag Macbeth color checker chart used to ensure all cameras perceive colors similarly.

Demos

A demonstration of the tele-immersive system set up for proprioception experiments with wheelchair basketball players.

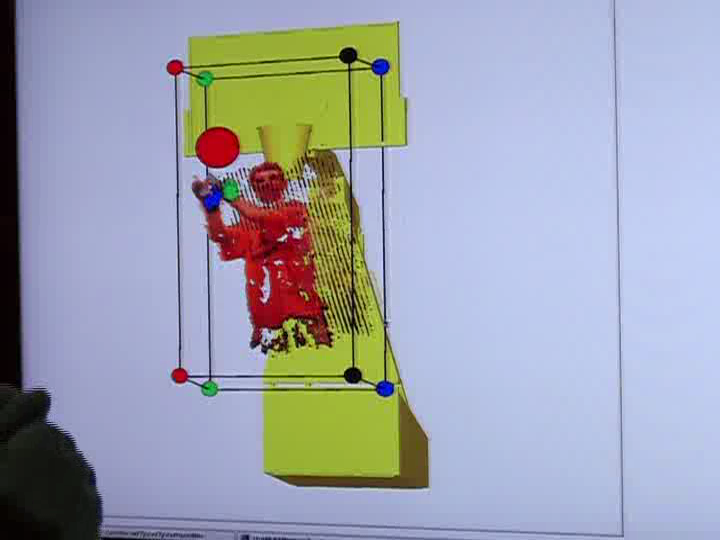

Left: A demonstration of the thermal IR camera data being integrated with the 3D camera data in real time to perform background removal. Right: A demonstration of the tele-immersive system being used by two users to maneuver a virtual ball into a virtual basketball hoop. The two users wear colored gloves, which are easily detected by the system, to interact with the scene.

Applications

Remote Dance, TEEVE

Joint performance of dancers from two remote sites, UC Berkeley and the University of Illinois at Urbana-Champaign. The live performance took place on Dec 8, 2006:

Snapshots of the real-time interaction between the dancers from UIUC and UC Berkeley in the tele-immersive environment.

Wheelchair Basketball Coaching

PHYSNET Project: Physical Interaction Using the Internet - The Virtual Coach Intervention

In a tele-immersive environment, players can learn shooting and other techniques (e.g. hook pass, figure-eight dribble, one-on-one defense) with minimal physical interaction with other players, which reduces the likelihood of injuries. In addition, all players, regardless of location, can be closely supervised by a coach. For this work we collaborate with Mike Frogley, head coach of the men's and women's basketball teams at the University of Illinois.

Tele-immersion studio at NCSA connected to another such studio at the CS department of UIUC. The shared virtual environment allows the two basketball players to interact from their separate locations.

Groupscope: Instrumenting Research on Interaction Networks in Complex Social Contexts

Groupscope brings together 11 investigators from eight campus units and five colleges who propose to develop technologies for understanding social interactions with a fidelity that enables breakthrough social research. The project will employ advanced computing applications and technologies to capture, annotate, and analyze group interaction networks in complex social contexts. These technologies will address a major challenge faced by researchers studying the socio-economic consequences of decisions made by individuals acting in groups: the inability to collect the high-resolution, high-quality, high-volume interaction network data necessary to test and extend the science of group interaction networks. This methodology will implement sophisticated, never-before-used models for interaction analysis, enabling researchers to contribute significant theoretical insights and practical applications in diverse yet socially significant areas such as disaster response and adolescent deviancy.