University of Illinois

University of Illinois at Urbana-Champaign


About

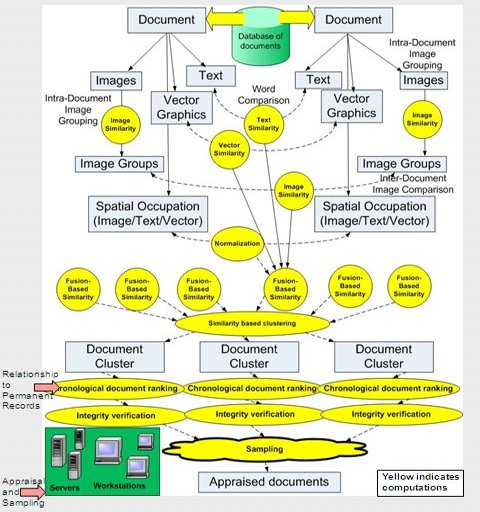

Exploratory Document Appraisal Framework

The overall motivation is to support answering appraisal criteria related to document relationships, the chronological order of information, storage requirements, and the incorporation of preservation constraints (e.g., storage cost). Both the volume of contemporary documents and the number of embedded object types are steadily growing. The project addresses the problem of document comparison by analyzing the similarity of the primitives contained in each document. Images, text, and vector graphics are extracted from PDF documents, and the characteristics of each segment are computed and fused. Grouping of similar documents, integrity verification, and sampling are performed using similarity metrics computed over all PDF components.

A methodology of document appraisal.

Additionally, word counting is performed using cluster computing and the Map/Reduce programming paradigm. The statistical descriptors of documents can be extracted by processing subsets of the data in parallel, because the counting operations are commutative and associative and the data can be partitioned into equal-size chunks.

Objectives: Design a methodology, algorithms, and a framework for conducting comprehensive document appraisals by (a) enabling exploratory document analyses and integrity/authenticity verification, (b) supporting automation of appraisal analyses, and (c) evaluating the computational and storage requirements of computer-assisted appraisal processes.

The proposed approach is based on decomposing the series of appraisal criteria into a set of focused analyses:

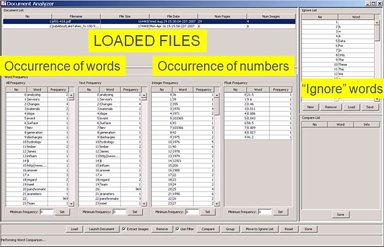

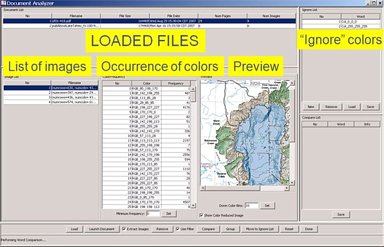

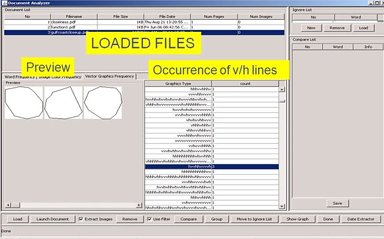
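The parallel word counting described above relies on per-chunk counts being mergeable in any order. The sketch below is a minimal single-machine illustration of that idea, assuming whitespace tokenization and lower-casing (neither is specified in the source); the helper names `split_into_chunks`, `map_chunk`, `merge_counts`, and `word_histogram` are hypothetical and do not come from the project's code.

```python
from collections import Counter
from functools import reduce


def split_into_chunks(words, n_chunks):
    """Partition a list of words into n_chunks contiguous, nearly equal-size chunks."""
    size, extra = divmod(len(words), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < extra else 0)
        chunks.append(words[start:end])
        start = end
    return chunks


def map_chunk(chunk):
    """Map step: count word occurrences in one chunk, independently of the others."""
    return Counter(chunk)


def merge_counts(a, b):
    """Reduce step: add two partial counts; addition is commutative and associative."""
    return a + b


def word_histogram(text, n_chunks=4):
    """Count words by mapping over equal-size chunks and reducing the partial counts."""
    words = text.lower().split()  # assumption: simple whitespace tokenization
    partials = [map_chunk(c) for c in split_into_chunks(words, n_chunks)]
    return reduce(merge_counts, partials, Counter())


sample = "the cat and the hat and the bat"
print(word_histogram(sample, n_chunks=3).most_common(2))  # [('the', 3), ('and', 2)]
```

Because `merge_counts` is commutative and associative, the partial counts can be combined in any order or grouping, which is what allows the chunks to be processed on different machines.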

The methodology starts with pair-wise comparisons of text, image (raster), and vector graphics components, continues with computing their weights and establishing group relationships to permanent records, and concludes with integrity verification and sampling. The figures below show multiple views of the PDF content as needed for appraisal. While Adobe PDF Reader presents the layout of a document page by page, other views may be needed to understand the characteristics of its PDF components. We have built a visual representation of comprehensive document comparisons using text, image, and vector graphics objects.

Viewing PDF content using Adobe PDF Reader.

The Document To Learn (Doc2Learn) framework compares PDFs and lets users easily see how similar the content of multiple documents is. It statistically compares the frequency of occurrence of three primitive data types: words in text, colors in bitmap images, and lines in vector graphics. The comparison is used to generate a similarity graph.
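To make the frequency comparison concrete, here is a minimal sketch of building a similarity graph from per-document histograms. The source does not name the similarity metric Doc2Learn uses; histogram intersection on normalized frequencies is an assumption chosen for illustration, and the `threshold` parameter and all function names are hypothetical. The same functions apply whether the histogram keys are words, color tuples, or line descriptors.

```python
from collections import Counter
from itertools import combinations


def normalize(hist):
    """Convert raw counts to relative frequencies so document sizes do not dominate."""
    total = sum(hist.values())
    return {k: v / total for k, v in hist.items()} if total else {}


def histogram_intersection(h1, h2):
    """Assumed metric: sum of the minimum relative frequencies, a score in [0, 1]."""
    p, q = normalize(h1), normalize(h2)
    return sum(min(p[k], q[k]) for k in p.keys() & q.keys())


def similarity_graph(histograms, threshold=0.5):
    """Return edges (i, j, score) for document pairs whose similarity >= threshold."""
    edges = []
    for i, j in combinations(range(len(histograms)), 2):
        score = histogram_intersection(histograms[i], histograms[j])
        if score >= threshold:
            edges.append((i, j, score))
    return edges


docs = [Counter("a a b".split()), Counter("a b b".split()), Counter("c c".split())]
print(similarity_graph(docs))  # one edge between documents 0 and 1, score about 0.67
```

Normalizing before comparing is a design choice for this sketch: without it, a long document and a short one with identical word distributions would look dissimilar.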

Viewing characteristics of PDF content using the developed framework, Document To Learn (Doc2Learn).

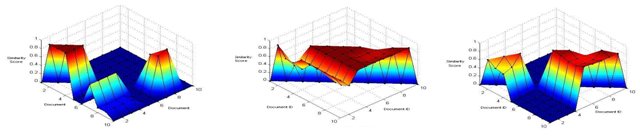

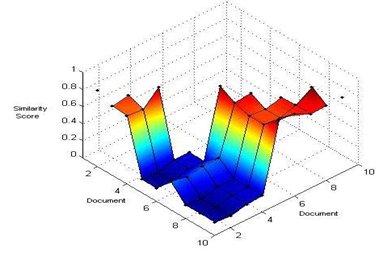

Top: text primitives shown as word histograms and image PDF primitives shown as groups of colors.

Example

A set of 10 PDF documents was evaluated for overall similarity. The pair-wise document similarity plots (below) show dramatically different similarities obtained using text-only (left), image-only (middle), and vector graphics-only (right) components; combining all three therefore leads to higher accuracy.
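One way to combine the per-component results is a weighted average of the text, image, and vector graphics similarity matrices, followed by grouping documents whose combined similarity exceeds a cutoff. This is a sketch under stated assumptions: the source does not specify how the component weights are computed or how groups are formed, so the weights, the `threshold`, and the connected-components grouping below are all hypothetical.

```python
def fuse_similarities(matrices, weights):
    """Weighted average of per-component n x n similarity matrices (weights assumed)."""
    total_w = sum(weights)
    n = len(matrices[0])
    return [[sum(w * m[i][j] for m, w in zip(matrices, weights)) / total_w
             for j in range(n)] for i in range(n)]


def group_documents(sim, threshold):
    """Connected components of the graph with an edge wherever sim[i][j] >= threshold."""
    n = len(sim)
    parent = list(range(n))

    def find(x):
        # Union-find root lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for i in range(n):
        for j in range(i + 1, n):
            if sim[i][j] >= threshold:
                parent[find(i)] = find(j)
    groups = {}
    for i in range(n):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


# Hypothetical per-component similarities for four documents.
text = [[1, .8, .1, .1], [.8, 1, .1, .1], [.1, .1, 1, .2], [.1, .1, .2, 1]]
image = [[1, .6, .2, .2], [.6, 1, .2, .2], [.2, .2, 1, .9], [.2, .2, .9, 1]]
vector = [[1, .5, .3, .3], [.5, 1, .3, .3], [.3, .3, 1, .7], [.3, .3, .7, 1]]
combined = fuse_similarities([text, image, vector], weights=[0.4, 0.4, 0.2])
print(group_documents(combined, threshold=0.5))  # [[0, 1], [2, 3]]
```

In this toy input the text matrix alone links only documents 0 and 1, while the image matrix strongly links 2 and 3; the combined matrix recovers both groups.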

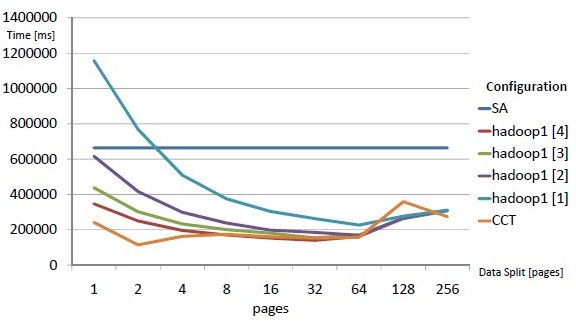

Three pair-wise document similarity plots for text, image (raster), and vector graphics components. Similarity plot (left) using all weighted document components. Red indicates higher similarity, for example between documents 1 and 2. Identities (score = 1) of a document with itself are not shown. Two groups of similar documents have been identified from the combined similarity plot.

Computational Scalability Using Hadoop

The counting operation is computationally intensive, especially for images, since each pixel is counted as if it were a word. While a page contains about 900 words, a relatively small image of 209x188 pixels (39,292 pixels) is equivalent to about 44 pages of text. Our goal is to count the occurrences of words per page, of colors in each image, and of vector graphics elements in the document. The Hadoop Map/Reduce software platform has been used for large-scale document text processing. Hadoop-based applications run on large clusters (thousands of nodes) of commodity hardware in a reliable, fault-tolerant manner.
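Counting colors so that each pixel is treated like a word maps naturally onto a mapper that emits one record per pixel and a reducer that sums records per color. Hadoop Streaming, which runs programs that read records from standard input and write tab-separated key/value lines to standard output, is one way to express this; the source does not say which Hadoop API was actually used. The sketch assumes a hypothetical input format of one pixel per line as `R G B`, and `run_local` only simulates the map, shuffle/sort, and reduce phases on one machine.

```python
from itertools import groupby


def mapper(lines):
    """Emit 'R,G,B<TAB>1' for every pixel line of the assumed form 'R G B'."""
    for line in lines:
        parts = line.split()
        if len(parts) == 3:  # skip malformed lines
            yield f"{parts[0]},{parts[1]},{parts[2]}\t1"


def reducer(sorted_records):
    """Sum counts per color; records must arrive grouped by key, as after the shuffle."""
    keyed = (rec.rstrip("\n").split("\t", 1) for rec in sorted_records)
    for key, group in groupby(keyed, key=lambda kv: kv[0]):
        yield f"{key}\t{sum(int(v) for _, v in group)}"


def run_local(pixel_lines):
    """Single-machine stand-in for Hadoop: map, sort (shuffle), then reduce."""
    return list(reducer(sorted(mapper(pixel_lines))))


print(run_local(["255 0 0", "0 0 255", "255 0 0"]))  # ['0,0,255\t1', '255,0,0\t2']
```

On a cluster the sort between the two phases is done by the framework, so the mapper and reducer never need to see the whole image at once.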

The graph shows preliminary processing times for a 10 MB NARA report on cluster configurations with different numbers of machines.

Configurations of Hadoop clusters: Two different clusters have been used. The first cluster, hadoop1, is located at NCSA and consists of four identical machines; it can run 20 Map tasks and 4 Reduce tasks in parallel. The second cluster, the Illinois Cloud Computing Testbed (CCT), is in the CS department at the University of Illinois and consists of 64 identical machines; it can run 384 Map tasks and 128 Reduce tasks in parallel. We used an experimental report provided by NARA. The report is 10 MB (10,330,897 bytes) and contains 248 pages with 179,187 words, 236 images (average image size 209x188 pixels; 16,655,776 pixels in total), and 30,924 vector graphics objects. We compared the time needed to extract the occurrence statistics from the Columbia report using Hadoop with the time needed using a stand-alone application (SA).

People, Publications, Presentations

Team members

Publications and presentations

http://www.archives.gov/era/research/research-publications.html
